The Group is involved in a broad range of activities related to image and video coding at bit rates ranging from below 20 kb/s to broadcast rates, including High Definition.

We are currently conducting research in the following topics:

- Parametric Coding – a paradigm for next generation video coding

- Modelling and coding for 3G-HDTV and beyond – preserving production values and increasing immersivity (through resolution and dynamic range)

- Scalable Video Coding – a paradigm for codec based congestion management

- Distributed video coding – shifting the complexity from the encoder(s) to the decoder(s)

- Complexity reductions for HDTV and post-processing

- Biologically and neurally inspired media capture/coding algorithms and architectures

- Architectures and sampling approaches for persistent surveillance – analysis using spatio-temporal volumes

- Eye tracking and saliency as a basis for context specific systems

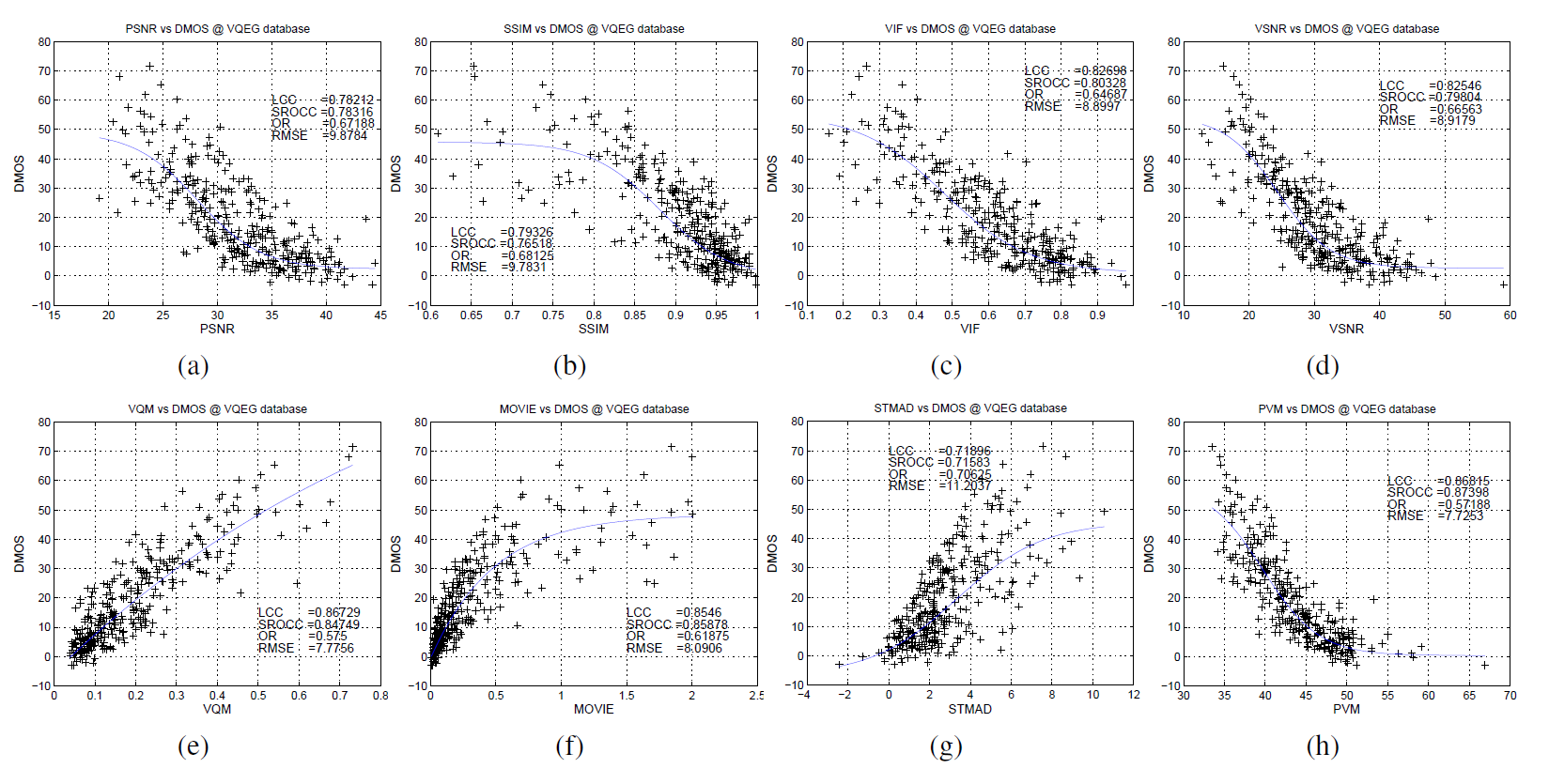

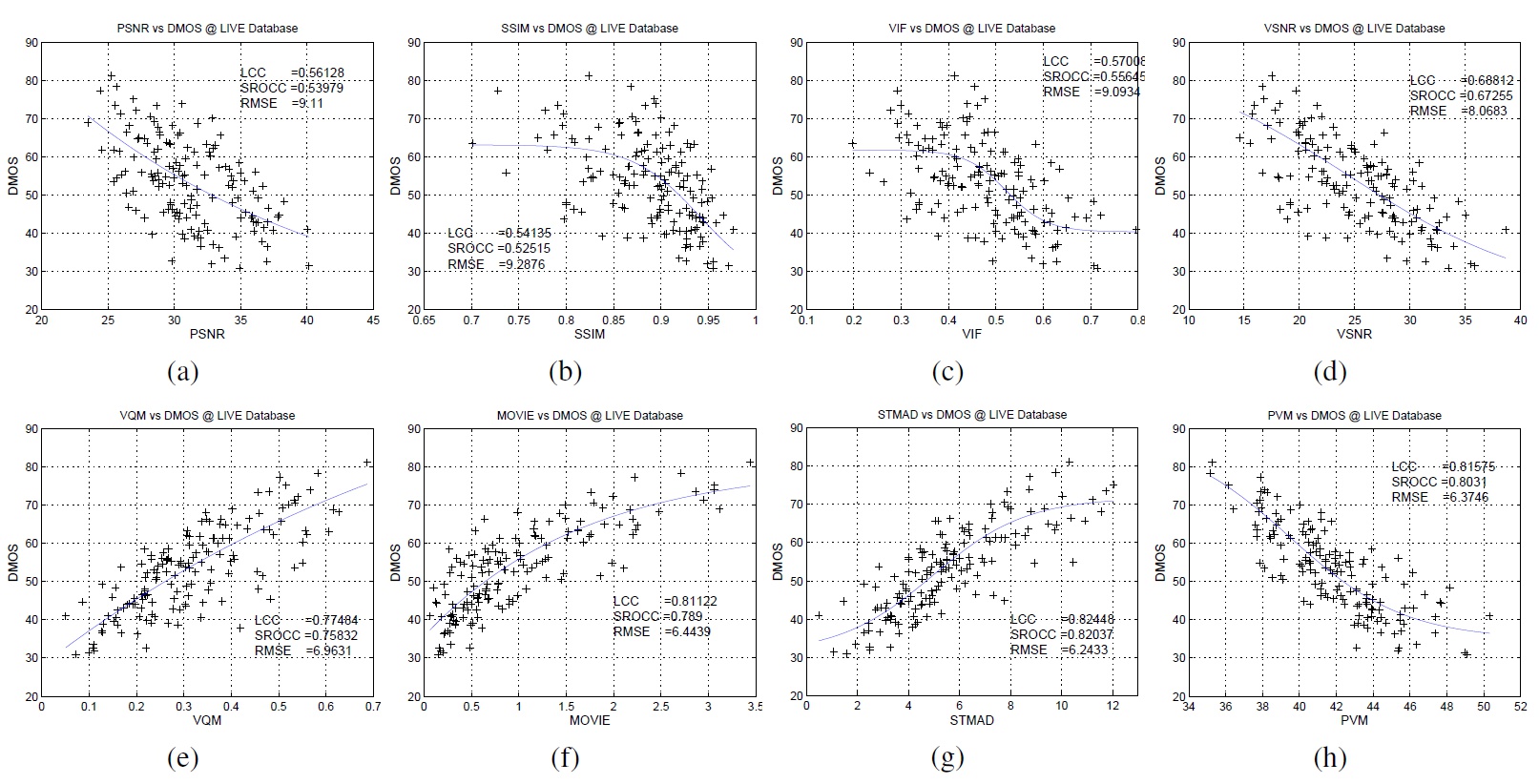

- Quality assessment methods and metrics

Early work in the Group developed the concept of Primitive Operator Signal Processing, which enabled the realisation of high-performance, multiplier-free filter banks. This led to collaboration with Sony, enabling the first ASIC implementation of a sub-band video compression system for professional use. In EPSRC project GR/K25892 (architectural optimisation of video systems), world-leading complexity results were achieved for wavelet and non-linear filter bank implementations.

International interest has been stimulated by our work on Matching Pursuits (MP) video coding, which preserves the superior quality of MP for displaced-frame difference coding while offering dramatic complexity savings and efficient dictionaries. The Group has also demonstrated that long-term prediction is viable for real-time video coding; its simplex minimisation method offers up to 2 dB improvement over single-frame methods with comparable complexity.

Following the Group’s success in reduced-complexity multiple-reference-frame motion estimation, interpolation-free sub-pixel motion estimation techniques were produced in ROAM4G (UIC 3CResearch), offering improvements of up to 60% over competing methods. Also in ROAM4G, a novel mode-refinement algorithm was invented for video transcoding, which reduces complexity relative to full search by up to 90%. Both pieces of work have generated patents, which have been licensed to ProVision and STMicroelectronics respectively. Significant work on H.264 optimisation has been conducted in both ROAM4G and the EU FP6 WCAM project.

In 2002, region-of-interest coding was successfully extended to sign language (DTI). Using eye tracking to reveal viewing patterns, foveation models provided bit-rate reductions of 25 to 40% with no loss in perceived quality. This has led to a research programme with the BBC on sign language video coding for broadcasting.

In collaboration with the Metropolitan Police, VIGELANT (EPSRC 2003) produced a novel joint optimisation for rapidly deploying wireless-video camera systems incorporating both multi-view and radio-propagation constraints. With Heriot-Watt and BT, the Group has developed novel multi-view video algorithms which, for the first time, optimise the trade-off between compression and view synthesis (EPSRC).

Methods of synthesising high-throughput video signal processing systems, which jointly optimise algorithm performance and implementation complexity, have been developed using genetic algorithms. Using a constrained architectural style, results have been obtained for 2D filters, wavelet filter banks and transforms such as the DCT. In 2005, innovative work in the Group led to the development of the X-MatchPROvw lossless data compressor (BTG patent assignment), which at the time was the fastest in its class.