Fan Zhang, Angeliki Katsenou, Mariana Afonso, Goce Dimitrov and David Bull

ABSTRACT

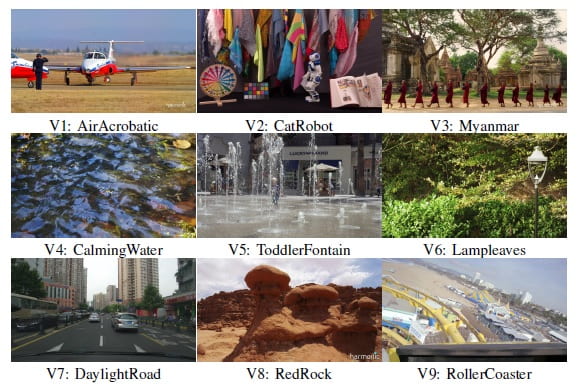

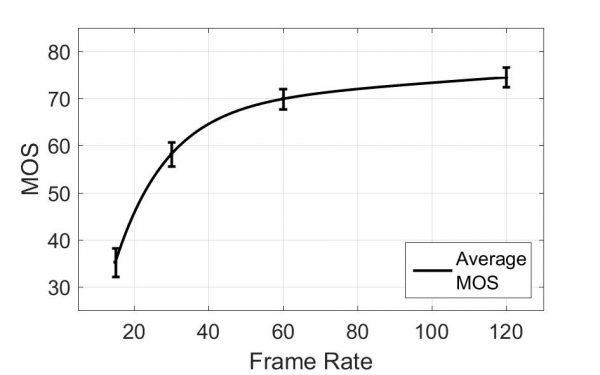

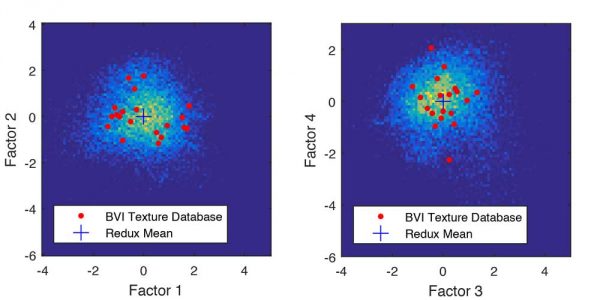

In this paper, the performance of three state-of-the-art video codecs, High Efficiency Video Coding (HEVC) Test Model (HM), AOMedia Video 1 (AV1) and Versatile Video Coding Test Model (VTM), is evaluated using both objective and subjective quality assessments. Nine source sequences were carefully selected to offer both diversity and representativeness, and different resolution versions were encoded by all three codecs at pre-defined target bitrates. The compression efficiency of the three codecs is evaluated using two commonly used objective quality metrics, PSNR and VMAF. The subjective quality of their reconstructed content is also evaluated through psychophysical experiments. Furthermore, HEVC and AV1 are compared within a dynamic optimization framework (convex hull rate-distortion optimization) across resolutions and over a wider bitrate range, using both objective and subjective evaluations. Finally, the computational complexities of the three tested codecs are compared. The subjective assessments indicate that, for the tested versions, there is no significant difference between AV1 and HM, while the tested VTM version shows significant enhancements. The selected source sequences, compressed video content and associated subjective data are available online, offering a resource for compression performance evaluation and objective video quality assessment.

Parts of this work have been presented at the IEEE International Conference on Image Processing (ICIP) 2019 in Taipei and at the Alliance for Open Media (AOM) Symposium 2019 in San Francisco.
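The convex hull rate-distortion optimization mentioned in the abstract can be illustrated with a minimal sketch. It assumes each encode is summarised as a (bitrate, quality, resolution label) triple and keeps only the operating points on the upper convex hull of the rate-quality plane. The function name `rd_convex_hull` and all numeric values are hypothetical illustrations, not data or code from the paper, and the paper's actual procedure may differ.

```python
from typing import List, Tuple

# (bitrate in kbps, quality score such as PSNR or VMAF, resolution label)
RDPoint = Tuple[float, float, str]


def _cross(o: RDPoint, a: RDPoint, b: RDPoint) -> float:
    """2-D cross product of vectors o->a and o->b in the (bitrate, quality) plane."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def rd_convex_hull(points: List[RDPoint]) -> List[RDPoint]:
    """Return the points on the upper convex hull, ordered by increasing bitrate."""
    # Keep only the best-quality encode at each distinct bitrate.
    best = {}
    for p in points:
        if p[0] not in best or p[1] > best[p[0]][1]:
            best[p[0]] = p
    ordered = sorted(best.values(), key=lambda p: p[0])

    # Monotone-chain scan: pop the last point whenever it lies on or
    # below the line joining its neighbours (i.e. it is not a right turn).
    hull: List[RDPoint] = []
    for p in ordered:
        while len(hull) >= 2 and _cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)

    # Spending more bits for less quality is never useful, so stop at the peak.
    peak = max(range(len(hull)), key=lambda i: hull[i][1])
    return hull[: peak + 1]


# Hypothetical operating points for two resolutions (made-up values).
encodes = [
    (500, 30.0, "540p"), (1000, 33.0, "540p"),
    (1500, 33.5, "540p"), (2000, 34.5, "540p"),
    (1000, 32.0, "1080p"), (2000, 36.0, "1080p"), (4000, 38.0, "1080p"),
]
hull = rd_convex_hull(encodes)
for bitrate, quality, label in hull:
    print(bitrate, quality, label)
```

In this toy input the lower resolution is selected at low bitrates and the higher resolution at high bitrates, which is the kind of per-bitrate resolution switching that a convex hull approach is meant to capture.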

SOURCE SEQUENCES

DATABASE

[DOWNLOAD] subjective data.

[DOWNLOAD] all videos from University of Bristol Research Data Storage Facility.

If this content has been mentioned in a research publication, please give credit to the University of Bristol by referencing the following paper: